This is a follow-up to my previous blog post, “Windows and Legacy Python: Scaling up With Celery”. In it, I talked about how I modified an outage forecasting system to run as a distributed system using Celery 3.1, working around the limitations of integrating with PSSE 33, Siemens’ Power System Simulator for Engineering tool. Those limitations were that I was forced to use Python 2.7 and run on Windows.

Well, good news! We’ve started the process of migrating to PSSE 35.3 and with it comes an upgrade to Python 3.9.7. Finally, a version of Python that hasn’t been end-of-life since before we started using it. Now, I just had to address an issue that had been looming since we chose Celery as our distributed task queue in the first place. Celery dropped support for Windows in 2016 with Celery 4.0.

Before I spent time migrating to another distributed task queue, like Dramatiq, I decided to research what dropping support for Windows actually meant.

Concurrency

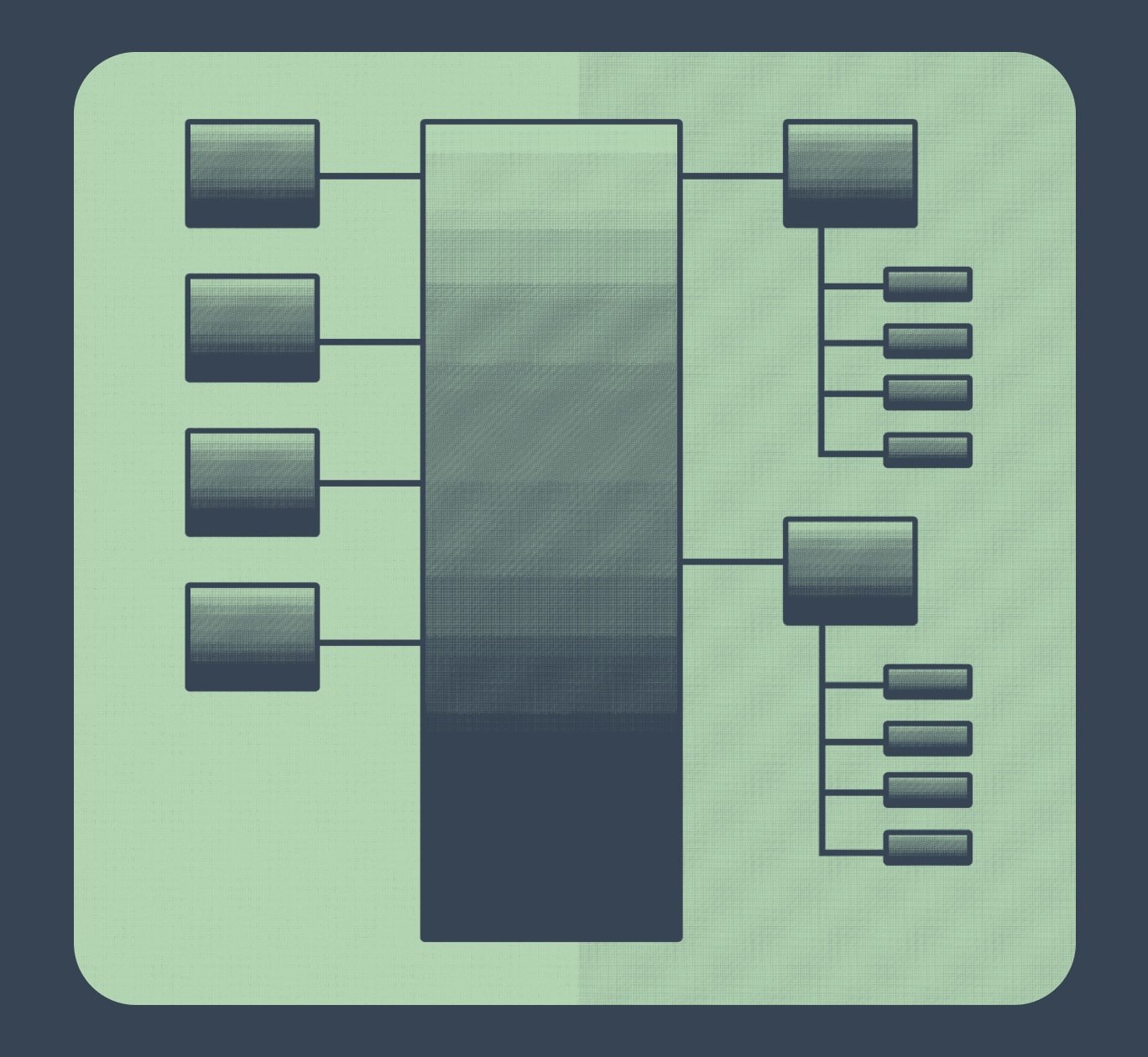

There are several concurrency options in Celery.

Prefork, the default option, works by forking the worker process to create child processes that make up the execution pool. The worker process handles coordination with the broker and the execution pool while the execution pool executes tasks.

The threads option works similarly to the prefork option, except the execution pool is made up of spawned threads instead of forked processes. This sounds good until you realize that only one thread can execute Python bytecode at a time because of Python’s Global Interpreter Lock (or GIL), so CPU-heavy Python code gains nothing from the extra threads.
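A quick way to see the GIL's effect outside Celery is to time the same CPU-bound function run back to back and then across a thread pool. This is a minimal sketch, assuming a standard GIL-enabled CPython build; `fib`, `run_in_threads`, and `run_sequentially` are illustrative names, not part of the project.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fib(n):
    """Deliberately slow recursive Fibonacci, used only to burn CPU."""
    return n if n <= 1 else fib(n - 1) + fib(n - 2)


def run_in_threads(n, calls):
    """Run fib(n) `calls` times in a thread pool; return (results, seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=calls) as pool:
        results = list(pool.map(fib, [n] * calls))
    return results, time.perf_counter() - start


def run_sequentially(n, calls):
    """Run fib(n) `calls` times one after another; return (results, seconds)."""
    start = time.perf_counter()
    results = [fib(n) for _ in range(calls)]
    return results, time.perf_counter() - start


if __name__ == "__main__":
    _, threaded = run_in_threads(25, 3)
    _, sequential = run_sequentially(25, 3)
    # On a GIL-enabled interpreter the two timings are expected to be similar.
    print(f"threads: {threaded:.2f}s, sequential: {sequential:.2f}s")
```

If the threaded run is not noticeably faster than the sequential one, the threads are taking turns on the interpreter rather than running in parallel.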

The eventlet and gevent options use greenlets, lightweight coroutines that offer high concurrency with low overhead for I/O heavy tasks.

The solo option works by executing tasks in the worker process itself instead of creating an execution pool. It only works on one task at a time and long-running tasks can even run into broker visibility timeout issues. You can work around the lack of concurrency by starting multiple solo pool workers.
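If long-running tasks do run into the visibility timeout, one mitigation that may help with the Redis transport is to raise `visibility_timeout` through Celery's `broker_transport_options` setting and to limit prefetching with `worker_prefetch_multiplier`. The sketch below assumes a Redis broker; the six-hour figure and the `long_task_settings` helper are illustrative, not values from our system.

```python
def long_task_settings(max_task_hours):
    """Build Celery settings for tasks that can run for `max_task_hours`."""
    return {
        # Seconds the Redis transport waits before redelivering an
        # unacknowledged message to another worker.
        "broker_transport_options": {"visibility_timeout": max_task_hours * 3600},
        # Reserve only one message per pool slot so tasks do not sit
        # unacknowledged behind a long-running one.
        "worker_prefetch_multiplier": 1,
    }


settings = long_task_settings(6)
# With a Celery app object this would be applied as:
#     celery_app.conf.update(settings)
print(settings)
```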

Prefork is the default option for a reason. All of the options (except solo) offer concurrency, but only prefork offers parallelism for Python code, so it’s the only option suitable for running multiple CPU-heavy Python tasks at the same time. It’s also the option that no longer works on Windows, because Windows does not support forking, only spawning. Before Celery 4, the Billiard module that handles multiprocessing for Celery would either fork or spawn processes depending on the operating system. That behavior was removed, which ended support for the prefork pool on Windows. There was a hack to get around this by setting the FORKED_BY_MULTIPROCESSING environment variable, but it no longer works.
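The fork versus spawn difference is visible from the standard library. The snippet below is a small sketch that does not involve Celery or Billiard; it lists the process start methods that `multiprocessing` offers on the current platform. `start_methods` is just an illustrative helper name.

```python
import multiprocessing
import sys


def start_methods():
    """Return the process start methods available on this platform."""
    return multiprocessing.get_all_start_methods()


if __name__ == "__main__":
    methods = start_methods()
    print(sys.platform, methods)
    # Windows only offers "spawn"; Unix-like systems also list "fork".
    print("fork available:", "fork" in methods)
```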

The Outage Forecast System

Now that I knew what a lack of support for Windows meant, I had a decision to make. I could migrate to another distributed task queue or I could continue using Celery with limited concurrency options.

I decided to evaluate the type of Celery tasks we run and see if they could be run with minimal impact using the threads or solo concurrency options. The first type of Celery task we run generates a custom outage forecast and is kicked off by the UI. These are CPU heavy tasks that can take hours to run. However, it’s incredibly unlikely that more than one or two of these are running at the same time and we currently run workers for them on four different machines. I didn’t see running these using the threads option as an issue.

The second type of Celery task we run uses an application called TARA to run outage reliability analysis. TARA is incredibly CPU heavy and we run hundreds of these tasks in parallel at least once a week. This seemed like it could be an issue, but the Python code in the Celery task wasn’t actually doing much besides using the subprocess module to run TARA. I didn’t think there’d be an issue using the threads option, but I needed to test it to make sure.
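The reasoning here is that a thread waiting on a child process is blocked outside the interpreter, so the GIL is free for other threads while the external program does the heavy lifting. The sketch below illustrates that idea with a stand-in: a child Python process that spins for about one second plays the role of TARA. `BUSY_CHILD`, `run_child`, and `run_children_in_threads` are hypothetical names for this example only.

```python
import subprocess
import sys
import time
from concurrent.futures import ThreadPoolExecutor

# Stand-in for an external CPU-heavy program: a child Python process
# that spins for roughly one second.
BUSY_CHILD = [
    sys.executable,
    "-c",
    "import time; end = time.perf_counter() + 1.0\n"
    "while time.perf_counter() < end: pass",
]


def run_child(_):
    """Block this thread on the child process and return its exit code."""
    return subprocess.call(BUSY_CHILD)


def run_children_in_threads(count):
    """Launch `count` children from a thread pool; return (codes, seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=count) as pool:
        codes = list(pool.map(run_child, range(count)))
    return codes, time.perf_counter() - start


if __name__ == "__main__":
    codes, seconds = run_children_in_threads(3)
    # With enough free cores, the total is expected to stay close to the
    # duration of one child rather than three times that.
    print(codes, f"{seconds:.2f}s")
```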

Testing

I created a small Celery app and two test scripts to make sure Celery worked on Windows the way I thought it did.

    import subprocess

    from celery import Celery

    from celery_app_env import REDIS_HOST, TARA_EXE_PATH

    celery_app = Celery("tasks", broker=REDIS_HOST, backend=REDIS_HOST)


    def calculate_nth_fibonacci_number(n):
        # Naive recursion keeps the task CPU-bound for the GIL test.
        return (n if n <= 1 else calculate_nth_fibonacci_number(n - 1)
                + calculate_nth_fibonacci_number(n - 2))


    @celery_app.task
    def get_nth_fibonacci_number(n):
        return calculate_nth_fibonacci_number(n)


    @celery_app.task
    def run_tara(directive_file_path):
        # The Python side only launches TARA and waits for it to exit.
        subprocess.call([TARA_EXE_PATH, "%run " + directive_file_path])

    celery -A tasks worker --loglevel=info

Starts a worker with a prefork execution pool whose size equals the number of logical processors on the machine. On Windows, if you attempt to run a task, you see the worker receive it, but it immediately throws a ValueError exception.

    celery -A tasks worker --pool=solo --loglevel=info

Starts a solo worker.

    celery -A tasks worker --pool=threads --concurrency=5 --loglevel=info

Starts a worker with an execution pool of 5 threads.
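When several workers need to be started on one machine, for example multiple solo workers, it can be handy to generate these command lines instead of typing them. The helper below is a hypothetical sketch, not something from the project: `worker_command` and the `solo1`-style node names are assumptions, while `--pool`, `--concurrency`, `--loglevel`, and `-n` are standard Celery worker options.

```python
def worker_command(pool, concurrency=None, name=None):
    """Build the argument list for starting a Celery worker for the tasks app."""
    command = ["celery", "-A", "tasks", "worker", f"--pool={pool}"]
    if concurrency is not None:
        command.append(f"--concurrency={concurrency}")
    if name is not None:
        # -n sets the worker's node name; %h expands to the hostname.
        command += ["-n", f"{name}@%h"]
    command.append("--loglevel=info")
    return command


# One threads worker with five threads.
print(" ".join(worker_command("threads", concurrency=5)))
# Three solo workers, each needing a distinct node name.
for i in range(1, 4):
    print(" ".join(worker_command("solo", name=f"solo{i}")))
```

Returning a list rather than a string means the result can be passed straight to `subprocess.Popen` without shell quoting concerns.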

    from tasks import get_nth_fibonacci_number

    task = get_nth_fibonacci_number.delay(40)
    result = task.get(timeout=10000)
    print(result)

The first set of tests I ran was meant to demonstrate the GIL’s bottleneck on CPU-heavy tasks. I created a task that calculated the nth number of the Fibonacci sequence using a recursive function and chose to test against the 40th number of the sequence, which took roughly 25 seconds to calculate on the server.

With one solo worker and the script called 3 times at once (in separate console windows), the first task finished in 25 seconds, the second in 50 seconds, and the third in 75 seconds.

With three solo workers and the script called 3 times at once, they all finished in 25 seconds.

With one worker (with an execution pool of 5 threads) and the script called 3 times at once, they all finished in 75 seconds. Calling it 5 times at once increased the time to 125 seconds.
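These timings line up with a simple back-of-the-envelope model. Assume each task needs t seconds of CPU. A single solo worker runs tasks one after another, so task k finishes at k × t. One solo worker per task finishes every task at t, given enough free cores. A threads worker shares the interpreter between n running tasks, so all of them finish at roughly n × t, given a pool at least as large as n. The helper below encodes only that model; the function and mode names are made up for this example.

```python
def expected_finish_times(task_seconds, tasks, mode):
    """Estimate when each of `tasks` simultaneous submissions finishes."""
    if mode == "one_solo_worker":
        # Tasks run strictly one after another.
        return [task_seconds * k for k in range(1, tasks + 1)]
    if mode == "solo_worker_per_task":
        # Each task gets its own process and, ideally, its own core.
        return [task_seconds] * tasks
    if mode == "threads":
        # Threads take turns on the GIL, so all tasks finish together.
        return [task_seconds * tasks] * tasks
    raise ValueError(f"unknown mode: {mode}")


for mode in ("one_solo_worker", "solo_worker_per_task", "threads"):
    print(mode, expected_finish_times(25, 3, mode))
```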

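    import sys

    from tasks import run_tara

    # Path to the TARA directive file, passed as the first command-line argument.
    directive_file_path = sys.argv[1]
    task = run_tara.delay(directive_file_path)
    result = task.get(timeout=10000)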

The second set of tests I ran was meant to verify that the GIL was not a bottleneck when running TARA using the subprocess module. I created a task that used a directive file to run outage reliability analysis in TARA, which took roughly 375 seconds to run on the server.

With three solo workers and the script called 3 times at once, they all finished in 375-380 seconds.

With one worker (with a pool of 5 threads) and the script called 3 times at once, they all finished in 375-380 seconds, similar timing to running TARA directly on the server. Calling it 5 times at once increased the time to 385-400 seconds, a marginal increase.

Conclusion

After doing research and testing, I’m comfortable continuing to use Celery on Windows after the upgrade to Python 3.9.7. For now, at least. The impact of moving from processes to threads should be minimal for the tasks we currently use Celery for. If that changes or future versions of Celery no longer work properly on Windows, I’ll have to look at other distributed task queues like Dramatiq.
