The other night while having the geek dinner I was speaking with a colleague that said they had read about ParallelFX on my blog, but wasn’t really sure what use they had for it in their environment. Well, it isn’t always about high performance computing and matrix multiplication, sometimes it is about something as simple as sorting strings or making web service calls. So, you know what, I decided to sort some strings. Well, there is an implementation of quicksort on the array class, but there is no parallel implementation of it. So, I first decided that I needed to implement a parallel quicksort, which is actually quite easy. Since quicksort is a recursive algorithm that forks, it is almost as if it is built for being paralleled.

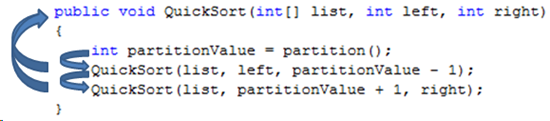

If you don’t remember exactly how the quicksort algorithm works, here is a partial listing that shows the main QuickSort method. As you can see we start with the partition method, which picks a value and pushes all values above that values to the right, and then pushes everything below it to the left. Then we just recursively call Quicksort for both sides and continue on getting smaller and smaller until everything is sorted. All we have to do is make these two recursive QuickSort calls in parallel and the algorithm just works. How easy is that?

So, how would we do that with ParallelFX? Well, my first thought was to use Tasks, which are objects that can be created through ParallelFX that allow you to run Action delegates. For example, if I had a method that I needed to call which was named "DoSomething" I could run it on a separate thread like this:

var task = System.Threading.Tasks.Task.Create(() => DoSomething());

But then I would have had to fire off two tasks and then write code to wait until they finished, but it turns out that ParallelFX already provides an easy way to do this. It is called "Parallel.Do". Using "Parallel.Do" the above code would look like this:

System.Threading.Parallel.Do(() => DoSomething());

But the best part comes in when you do this:

System.Threading.Parallel.Do(() => DoSomething(),

() => DoSomethingElse());

This allows us to pass as many actions as we like into this method, and it doesn’t return until they are all done. Exactly what we needed. So the quicksort above will now look like this (I also made it generic):

public void QuickSort<T>(T[] list, int left, int right)

where T : IComparable<T>

{

int partitionIndex = partition(list, left, right);

Parallel.Do(

() => QuickSort(list, left, partitionIndex - 1),

() => QuickSort(list, partitionIndex + 1, right));

}

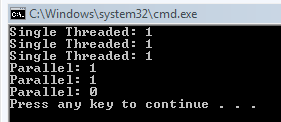

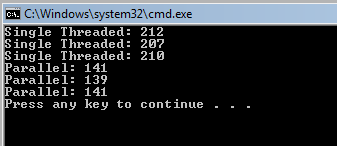

Parallel.Do also tries to reuse current threads, so (by default) you’ll never use up more threads than the number of processors in your system. So, what do the numbers look like when sorting arrays of strings? Well, I created code that would randomly generate strings from 10 to 30 in length and then I populated 6 different arrays with 1000 of them. I then ran the single threaded QuickSort three times and then I run the ParallelQuicksort three times. With 1000 items, here is the number of milliseconds that the sorts took.

So, obviously with 1000 items the differences are negligible. So, lets bump this up to 10,000 items.

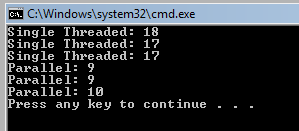

At 10,000 items we are already starting to see some significant differences. So, lets bump it up one more time to 100,000 items.

So, there you have it. You can see a pretty good performance gain from this algorithm, but with the QuickSort algorithm it is extremely important that you pick a good pivot value so that you split up your data set as evenly as possible when you first start off. The algorithm that I am using uses the "median of 3" method of picking a pivot values. It takes the first item, the last item, and the middle item in the array, and then picks the one in the middle. If you are dealing with large sets of data, and considering the fact that we are running this in parallel, it may make sense to spend even more time trying to find a good pivot point.

So, now that I showed you that you can sort a bunch of strings faster (or integers, or dates, etc…), how about making long running web service calls? If I have 10 web service calls that I need to make, and I only have two processors in my box then ParallelFx will only use 2 threads, right? That would be more efficient, but nowhere near as efficient as it could be. And yes, that is true, but that is just the default, we can go a step further with this and tell ParallelFX how many threads to use.

Action[] actions = { CallWebservice1(),

CallWebservice2(),

CallWebservice3(),

CallWebservice4(),

CallWebservice5()};

//1 for MinThreads, array length for IdealThreads

var policy = new TaskManagerPolicy(1, actions.Length);

TaskManager manager = new TaskManager(policy);

Parallel.Do(actions, manager, TaskCreationOptions.None);

Now this is a bit of a contrived example, because you have to know ahead of time what all of your calls are going to be. You cannot easily pass information into any of these calls and I’ll show you why. Lets say we have some code like this, which passes in a list of cities:

string[] names = { "Richmond, VA", "Washington, DC", "Boston, MA",

"Los Angeles CA", "Las Vegas, NV", "Seattle, WA" };

Action[] actions = new Action[names.Length];

float[] results = new float[names.Length];

for (int i = 0; i < names.Length; i++)

{

actions[i] = () => CallWebService(names[i]);

}

//1 for MinThreads, array length for IdealThreads

var policy = new TaskManagerPolicy(1, names.Length);

TaskManager manager = new TaskManager(policy);

Parallel.Do(actions, manager, TaskCreationOptions.None);

So, you can see that we have "CallWebService" and we are passing in "names[i]". When "Parallel.Do" is actually called we end up with an IndexOutOfRange exception! Why is that? Well, you can see that we are putting our call to "CallWebService" inside of a parameterless lambda. This creates a closure which binds to the surrounding local variables, and this includes the array index variable. So, by the time we call "Parallel.Do" the value for "i" is now set to one past the length of our array. For performing an operation like this you are going to be better off using "AsParallel" with "ForAll" like this:

names.AsParallel(names.Length).ForAll(name => CallWebService(name));

What is happening here is that we are using "AsParallel" to get an IParallelEnumerable, and we are passing in names.Length to the "DegreeOfParallelism" parameter. This tells our enumerable to use the same number of threads as there are items in our array. If we need to get results back from this, then we can call "Select" instead of "ForAll". We also need to maintain order so that our results can be coorelated with our data. (This may or may not be important to you)

var results = names.AsParallel

(ParallelQueryOptions.PreserveOrdering, names.Length)

.Select(name => CallWebService(name));

So, here you can see that we are passing the "PreserveOrdering" parameter as well as the number of threads, and then we call Select passing in our array item and the results are returned to our "results" variable as another IParallelEnumerable.

So, now you can see how you would use ParallelFX to operate on an array of values, but this could easily be used for anything that supports IEnumerable. You have also seen an example of passing data to a web service, but you could also use this for long running database calls or any other long running process. Hopefully you have found this interesting and it helps you out.

Loved the article? Hated it? Didn’t even read it?

We’d love to hear from you.

Nice examples! Could you by any chance post the complete sources?

I didn’t write the Quicksort code, I pulled it from another site, but I will try and put it up with a link to the original site (which was in Java). I am in Vegas now for Mix, but I’ll try to look at it this weekend.