This weekend, after the Raleigh Code Camp, James Avery put on an Open Spaces conference called Shadow Camp. It was a small group of people who came together on Sunday morning to discuss the topic of complexity in software. While people were proposing topics for the different slots, Corey Haines suggested “Emergent Design”. The idea of Emergent Design is not a new one in the agile world, but considering it in relation to software complexity led to an interesting discussion on entropy in software.

For many people the idea that complexity is emergent may sound like an obvious statement. We all know from the second law of thermodynamics that isolated systems trend toward disorder, but we think of those systems as uncontrolled natural systems. We don’t think of our software as a natural system that changes and evolves on its own under forces outside our control. But our software is constantly changing and evolving in ways that are more than the sum of the changes we put into it. Every time a developer touches a piece of code, the design of the system diverges a little further from the original design.

Without someone to constantly guide the design at a high level, it will slowly descend into chaos. Small changes in different places in the application will combine to form larger changes which will affect larger swaths of the application. So what do we do? Do we constantly keep an eye on the architecture of our systems? Well, the short answer is yes, but the long answer is that we can make decisions in our architectures that will allow us to minimize the impact of entropy.

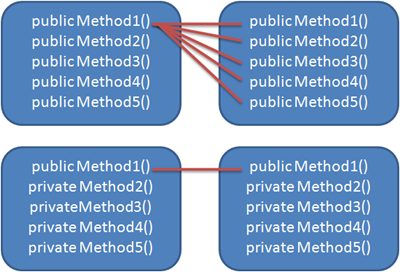

Complexity in software is all about interactions. Obviously interactions must happen; if they didn’t, the software couldn’t do anything. But complexity isn’t simply about the surface of your software and the number of methods on your objects. It is about the number of methods combined with the number of other methods that can call each of them. Let’s say that we have two classes and each class has five public methods. Then we have another two classes, each with one public method and four private methods.

Judging from what we said above, you might think that we could just say 5 * 5 and figure that we have 25 possible interactions in the first example, and one possible interaction in the second example. But the reality is much worse. In the first example, single methods could call multiple other methods, and methods within one class could call other methods from the same class. Now you may be saying to yourself, what does it matter if methods in a class call methods in the same class? If they are in the same class, can’t we just change them? No, we can’t. If we have exposed them publicly, we have created a contract on each of those methods. If we later need different behavior, we have to create a new method to support the new contract.

What all of this means is that as you expose more and more methods from your classes the potential for complexity increases exponentially. As you add more and more classes, the numbers just start increasing at a startling rate. So, let’s just assume that our simple 5 * 5 numbers above are accurate. If we had 3 classes, then this turns from 25 into 125. If we have 5 classes then we are now at a staggering 3125 possible interactions. If we stick with the 5 method number and go with 25 classes, which is still a fairly small application, then the numbers become almost incomprehensible at 2.98023224 × 10^17. This potential for interaction is what allows your beautifully architected application to slowly descend into a ball of chaos if these interactions aren’t constantly managed.
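
The arithmetic above can be checked with a few lines of Java. This is only a sketch of the simplified estimate used in this post (public methods per class raised to the number of classes); the class and method names are made up for illustration.

```java
import java.math.BigInteger;

// Hypothetical helper, not part of any real library: reproduces the
// simplified estimate of methodsPerClass raised to the power classCount.
final class InteractionEstimate {

    static BigInteger possibleInteractions(int methodsPerClass, int classCount) {
        // BigInteger avoids silent overflow when the inputs get larger.
        return BigInteger.valueOf(methodsPerClass).pow(classCount);
    }

    public static void main(String[] args) {
        // The class counts used in the paragraph above.
        for (int classes : new int[] {2, 3, 5, 25}) {
            System.out.println(classes + " classes: " + possibleInteractions(5, classes));
        }
    }
}
```

For 25 classes this gives 298023223876953125, which is the 2.98 × 10^17 quoted above.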

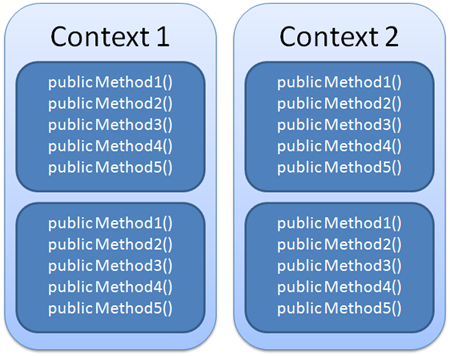

One of the tools that you can use to manage this complexity is partitioning. Divide up your application into chunks, and then manage the interactions between the chunks through strictly defined interfaces. In DDD this is referred to as a bounded context, and without bounded contexts not only does complexity keep increasing, it also gets harder and harder to manage. The reason for this is that as you add more and more classes to your application you have to consider all of the existing ones when designing new classes.

Even though we have five public methods on each class, each context contains only 25 possible interactions, with only minimal interactions between contexts. In extremely large applications, this can be one of the few ways to greatly reduce the number of possible interactions.
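
To get a rough feel for the difference, here is a small Java sketch that applies the same simplified estimate to a partitioned design. The names are hypothetical, the contexts are assumed to be equal in size, and calls that cross a context boundary through its interface are deliberately left out of the count.

```java
import java.math.BigInteger;

// Hypothetical helper for illustration only.
final class PartitionEstimate {

    // One big context: every class can reach every other class.
    static BigInteger unpartitioned(int methodsPerClass, int classCount) {
        return BigInteger.valueOf(methodsPerClass).pow(classCount);
    }

    // Equal-sized contexts: count interactions inside one context, then
    // multiply by the number of contexts. Cross-context calls through the
    // contexts' interfaces are NOT included in this number.
    static BigInteger partitioned(int methodsPerClass, int classesPerContext, int contextCount) {
        return unpartitioned(methodsPerClass, classesPerContext)
                .multiply(BigInteger.valueOf(contextCount));
    }
}
```

With four classes of five public methods each, `unpartitioned(5, 4)` is 625, while `partitioned(5, 2, 2)` is 50. The real saving depends on how narrow the interfaces between contexts are kept.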

At a lower level, another approach you can take is to make methods private or protected wherever you can. But be careful with this approach! Going overboard can cause your application to be overly rigid, but you also have to remember that every method you expose is a contract that you have tied yourself to in the future, especially if your class is exposed outside of your module.
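
As a rough illustration, here is what a class with one public method and four private helpers might look like in Java. Everything in it (the class name, the tax and discount values) is invented for the example. The point is only that callers can depend on a single contract, so the helpers stay free to change.

```java
// Hypothetical class, for illustration only: one public method, four private helpers.
final class InvoiceCalculator {
    private static final int TAX_PERCENT = 8;            // assumed value
    private static final int BULK_THRESHOLD = 10;        // assumed value
    private static final int BULK_DISCOUNT_PERCENT = 10; // assumed value

    // The single public contract. Nothing else can be called from outside.
    public long totalCents(long unitPriceCents, int quantity) {
        validate(unitPriceCents, quantity);
        long subtotalCents = subtotal(unitPriceCents, quantity);
        long discountedCents = applyBulkDiscount(subtotalCents, quantity);
        return addTax(discountedCents);
    }

    // Private helpers: free to rename, merge, or rewrite without breaking callers.
    private void validate(long unitPriceCents, int quantity) {
        if (unitPriceCents < 0 || quantity < 0) {
            throw new IllegalArgumentException("price and quantity must be non-negative");
        }
    }

    private long subtotal(long unitPriceCents, int quantity) {
        return unitPriceCents * quantity;
    }

    private long applyBulkDiscount(long subtotalCents, int quantity) {
        if (quantity < BULK_THRESHOLD) {
            return subtotalCents;
        }
        return subtotalCents - subtotalCents * BULK_DISCOUNT_PERCENT / 100;
    }

    private long addTax(long amountCents) {
        return amountCents + amountCents * TAX_PERCENT / 100;
    }
}
```

Any of the four helpers can be changed or removed without creating a new contract, which is exactly what is lost once a method is made public.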

Yet another approach you can take is to implement the Principle of Least Knowledge (also referred to as the Law of Demeter). This basically says that an object should only interact with methods on objects that it is directly holding, and should not call through an object to another object. For example:

    public void Method(SomeObject obj){
        obj.OtherObject.MethodOnOtherObject(); //don't do this
    }

By making these kinds of calls you are instantly increasing the number of other classes that your class interacts with directly. Instead, the call to “MethodOnOtherObject” should be wrapped in a method on “SomeObject” that does the interaction on behalf of this method. So, something like this:

    public void Method(SomeObject obj){
        obj.PerformAction();
    }
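
For a fuller picture, here is a minimal sketch of both sides of that wrapper, written in Java rather than the C#-style snippets above. `SomeObject`, `OtherObject` and `performAction` follow the names used in this post; `Caller` and the call counter are made up so the example has something observable.

```java
// Illustrative sketch only; Caller and callCount are hypothetical additions.
final class OtherObject {
    private int callCount = 0;

    void methodOnOtherObject() {
        callCount++; // stand-in for real work
    }

    int callCount() {
        return callCount;
    }
}

final class SomeObject {
    // Kept private, so callers cannot reach through SomeObject to OtherObject.
    private final OtherObject otherObject;

    SomeObject(OtherObject otherObject) {
        this.otherObject = otherObject;
    }

    // Wrapper that performs the interaction on the caller's behalf.
    void performAction() {
        otherObject.methodOnOtherObject();
    }
}

final class Caller {
    // Depends on SomeObject only; it has no knowledge of OtherObject.
    void method(SomeObject obj) {
        obj.performAction();
    }
}
```

`Caller` now compiles against `SomeObject` alone, so a change to `OtherObject` stops at `SomeObject` and does not reach every call site.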

Any way you can find to reduce the coupling between your objects will help you refactor later to reduce the complexity that is always going to bleed into your application. Managing complexity, and therefore keeping our applications agile, is our primary job as architects and developers. Next time you are designing an application, a class, or just a method, ask yourself if you are doing everything you can to manage the complexity.

Great post Justin. Did anyone bring up the way dependency injection frameworks help manage this complexity by externalizing dependencies, reducing coupling, programming to interfaces instead of implementations, etc.?

Neal Ford mentions the Law of Demeter in *The Productive Programmer*. It’s full of great tips on programmer productivity, including a section on avoiding what he calls "Accidental Complexity".

@David Yep, people definitely brought this up. In fact, Nate Kohari was there (the guy who wrote Ninject), so we had some good long healthy discussion about using DI to help manage complexity.

Great article, Justin!

Really puts into words what I’ve been feeling for several years! 🙂

I also liked your tips on organizing and "least-knowledge" principle.

Corey and I met at one of my first programming gigs back in 1999/2000. While we didn’t work directly together, we hung out and chatted. He’s a good guy, and I am glad to see he’s still stirring up some good debate. 🙂 He was always good for that.

Chris

obj.PerformAction();

You have merely moved the responsibility for handling the coupling between objects from the calling to the called object.

No reduction in complexity there – you’ve simply hidden it with sleight of hand from the code under discussion, and put it somewhere else.