On March 14th, four Simple Thread team members attended Richmond’s first ever Data Science Summit. The Summit was a full day conference with keynotes, multiple tracks, 30+ speakers, 500 attendees and lots of good lessons to take back to our team. In addition to attending the event, your humble correspondent served on the planning committee and our new UX Lead Spencer Hansen facilitated a panel on Understanding Data Through Visualization.

We know we’re not the most unbiased source, but as software engineers we found the day helpful as we navigate the data science landscape. What follows are some takeaways from what we’re sure will be a marquee tech event in Virginia for years to come.

Peak Data

Scouring the internet on Big Data, Machine Learning and Artificial Intelligence and you will find unhelpful aphorisms like “data is the new oil” and premonitions that we are (yet again many times since the transistor) on the brink of a revolution like the one which the spinning jenny helped set off nearly 250 years ago.

But it is more complicated than that. Machine Learning and Artificial Intelligence has been something talked about and attempted for decades and obviously is coming closer to reality. Still, we’re in something of an arrested development. Gartner’s Hype Cycle has been dangling machine learning/AI/Deep Learning (whatever they are calling it this year) over the edge for a few years now. Heck, they’ve even had the audacity to move it backwards on the cycle.

Regardless, in closing out the day, Capital One’s Vice President of Card Services Chris Peterson shared some sage advice from an early manager from his formative days at Intel that echo to the present.

https://twitter.com/BecomingDataSci/status/1106288756611575810

Remember that whatever tools are available to us now that weren’t available to semiconductor engineers 30 years ago — the scientific process still holds true.

Proceed With Caution

One of the best quotes of the event came off the cuff accompanied by a beer at the end of the day. When I was joking with a friend about how “data is the new oil” with accompanying eye roll, they replied with “I believe it, look how many toxic data spills we’ve already had.”

Data has the potential to be in many ways just like oil, but worse in that a drum of crude can only be spilled once. Not ironically as I write this, smoke-born carcinogens from a petrochemical refinery fire are impacting the lives of 6.2 million people in Houston, Texas. While data might have a less ecological disaster footprint, for people it can still be disastrous.

Beyond the bald-faced greed, neglect, and malfeasance of how people handle and use data there’s also this powerful quote from Susan Etlinger about even our best intentions.

Can a machine be racist or sexist? Renee @BecomingDataSci describes at what points in the process bias can be introduced in a model and how to mitigate this. Great examples across different types of models and applications #RVAtechsummit19 @rvatechcouncil pic.twitter.com/yLMYumOLwB

— Lauren Downey (@laurenmcdowney) March 14, 2019

This was addressed at length in Renée Teate’s talk on “Can a Machine be Racist or Sexist?” and should be required reading for anyone supporting or managing data science teams.

While there’s a lot of hand-wringing on sites like Forbes about how data scientists ought to to be better ethicists, the same can (and should!) be asked of the surrounding team as well as direct and executive management.

The Importance of Explainability

One of the more thought provoking talks of the day, came from Adam Wenschel, who in the 1990s started his career in AI with DARPA. Wenschel talked extensively about the dangers of data, particularly with “black box” solutions which engender far less accountability and therefore result in solutions without transparency and accountability. This is why Wenschel urged explainability or interpretability in all machine learning models. Many ML/AI techniques, particularly around neural network models and deep learning have a difficult time when it comes to being able to understand how a model reached a particular prediction or conclusion.

Wenschel cited an example of an autonomous driving model which interpreted its environment in a way that wasn’t robust and wasn’t easily interpretable. Some researchers found that the model was identifying things like speed limit signs using only a few key pixels. Through simple sticker vandalism on a stop sign, the algorithm would think it was seeing a 45MPH speed limit sign. Obviously, this is an extreme example, but if we can’t understand why a model is doing what it is doing, then our model might be much less robust than we assume it to be.

It’s not a cop-out, but most of the attending data scientists were not employed by the “move fast break things” culture of the Valley, but instead by regulated industries like healthcare, manufacturing, pharmaceuticals and finance. There is hope that more explainability and accountability will be part of whatever gets built here in the Richmond region.

Cooks Building Microwaves

Beyond struggling with how to train machines and do it ethically, there are also significant skills and team composition problems that came up repeatedly over the course of the Summit. This wound up being the central focus of Spencer’s panel … and probably why it was packed and there were lots of questions from the audience.

Standing room only for Data Visualization breakout session! #RVATECH #RVATECHSUMMIT19 #RVA #TodayAtMarathon #MarathonTwitterTakeover pic.twitter.com/eudtQTlc7T

— Marathon Consulting (@MarathonIT) March 14, 2019

My thesis? Many businesses are reacting to the needs of data science without the proper proactive approach and we’re wasting effort. The more we follow these bad patterns, the quicker we’ll slide down that Gartner Hype Cycle.

How then are we to build, deploy and integrate machine learning and the requisite skills into larger initiatives? When it comes to building data science practices inside an enterprise, what are the right questions to ask and the right ways to answer them?

The simple answer is that in a vacuum neither product owners, nor UX designers, nor software engineers nor data scientists seem to know by themselves. In her talk Machine Learning at the Edge, while discussing the power of putting right-sized ML models onto a device, Skafos.ai‘s Miriam Friedel explained how Skafos sees the composition of teams and skills around machine learning as a major problem and a reason for their early success.

This, Friedel cited can be explained by Cassie Kozyrkov’s excellent metaphor in her article, Why Businesses Fail at Machine Learning. The problem, explains Kozyrkov is that engineers who design appliances don’t automatically know how to construct actual recipes, don’t understand the context of foodways of cultures and don’t offer advice on how to manage a kitchen brigade. Instead,

… all those machine learning courses and textbooks are about how to build ovens (and microwaves, blenders, toasters, kettles… the kitchen sink!) from scratch, not how to cook things and innovate with recipes.

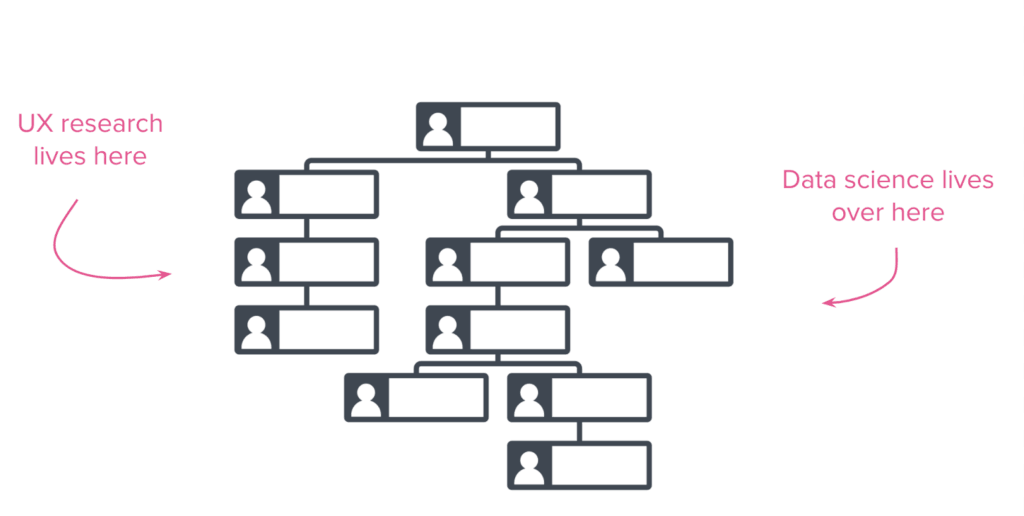

Part of the problem comes from who is framing the question. Part comes from data scientists answering unasked questions. One hack we expect will see more adoption over the next few years is pairing up UX Designers and Data Scientists instead of keeping them in separate silos like this:

While many businesses might not be doing enough of this yet, these pairings have the potential to unlock significant value of research, experience and data in revelatory ways. Some tech giants are already employing this practice routinely to great results. Case studies for this can already be found at UserTesting.com, AirBnB and Spotify. These companies are already finding great value from this practice.

Text Representations for Deep Learning

One of our favorite breakouts of the day was from Zak Brown. Brown talked about the practical problems with mapping text into a format to make it useful for deep learning and how those problems are solved by algorithms like Word2vec. Brown then talked about how in traditional NLP models represented words as discrete symbols, but Word2vec maps words into vectors with an arbitrary number of dimensions. This allows it to semantically map similar words near each other in that space by using the proximity of the words. So if the word apple and orange have similar contexts to each other, then they are probably similar concepts.

Brown then went on to talk about an algorithm called FastText created by Facebook which takes this concept one step further and applies it to not just whole words, but parts of words.

As software engineers, the idea that we can come up with novel ways of representing any kind of data as vectors so that we can mathematically reason about its attributes is a fascinating and endless topic. We can’t wait to find ways to use this in current and future projects!

Other Interesting Bits …

Computer Vision & Object Detection — This talk from NVIDIA showed how easy it can be to apply some of the tools available to data scientists. It talked a little bit about deep learning models for image classification, some of the pre-trained models in existence, then dove into how you can use one of these models to do image classification in both still images and video in the context of Tesla dashcam videos. Fascinating stuff!

Everything But — Another interesting takeaway was the “kitchen sink” approach mentioned in a number of different talks. As the name implies, this means throwing all your data into a statistical regression model. This of course leads to problems of overfitting and wasted compute time. It was cool though that researchers use this as a starting point of sorts due to the simplicity; even an application engineers like much of our team can track the process and progress of what’s happening at that level.

The Struggle Is Real — One of the interesting take aways for us was seeing that data science deals with a similar set of problems that application engineers also face from both technical and personnel perspectives. For example, how to explain a complex concept, vetting potential hires, self taught practictioners versus those who went through traditional education, ethics, the tradeoffs of limited compute power. This is probably a more ‘of course’ thought than anything … after all it’s just a subfield of technology. Still I suppose I went into the conference with slightly different expectations.

While this was just the first year for this event, we learned a lot about how application engineering and data science face similar but distinct challenges. We look forward to next year’s and hope to you can join us for it in 2020!

Additionally, If this compelling to you or your team, we’d highly recommend attending Tom Tom’s Applied Machine Learning conference in April where lots of these topics and more will be covered!

Loved the article? Hated it? Didn’t even read it?

We’d love to hear from you.