It pretty much goes without saying that if you are building a public-facing website these days, you are probably using a ridiculous amount of JavaScript. It is also likely that most of that JavaScript comes in the form of libraries that you didn’t write and don’t maintain. But even if you aren’t maintaining those libraries, you are still responsible for pushing them all down to your users. So you can end up in a situation where you have either hundreds of kilobytes of JavaScript or a ton of tiny script files. Both of these can really slow down the initial load of your site for your users.

Fortunately for us, there are several solutions to the problem of slow-loading JavaScript. One is to load as many of your libraries as possible from the content delivery networks (CDNs) provided by companies like Google and Microsoft. A second is to use a CDN of your own, such as Amazon’s CloudFront. But no matter what you are doing to speed up the delivery of your JavaScript, it is absolutely imperative that you do three things:

- Combine your JavaScript files: Concatenate all of your JavaScript files into a single file so that the browser only has to make one request to download your scripts.

- Minify your JavaScript files: Perform some optimizations on your JavaScript to remove whitespace, shorten variable names, and in some instances even perform some static analysis to optimize statements or remove unused code.

- Compress your JavaScript: Enable gzip compression so that users whose browsers support compression will receive a smaller file.
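To make the combine and compress steps concrete, here is a minimal Python sketch (not JavaScript Bundler’s actual code) that concatenates files in a given order and writes a pre-gzipped copy next to the bundle. The function names `combine_scripts` and `write_bundle` are hypothetical, and minification is deliberately left out, since that is the job of a dedicated tool such as jsmin or the Closure Compiler.

```python
import gzip
from pathlib import Path


def combine_scripts(paths: list[Path]) -> str:
    """Concatenate JavaScript files in the order given.

    A ';' on its own line follows each file so that a file missing its
    trailing semicolon can't merge with the next file's first statement.
    """
    parts: list[str] = []
    for path in paths:
        parts.append(path.read_text(encoding="utf-8"))
        parts.append("\n;\n")
    return "".join(parts)


def write_bundle(paths: list[Path], out: Path) -> tuple[int, int]:
    """Write the combined bundle plus a pre-gzipped copy (out + '.gz').

    Returns (plain_size, gzipped_size) in bytes.
    """
    data = combine_scripts(paths).encode("utf-8")
    out.write_bytes(data)
    gz_path = out.with_name(out.name + ".gz")
    gz_path.write_bytes(gzip.compress(data, compresslevel=9))
    return len(data), gz_path.stat().st_size
```

The leading newline in the separator also matters: if a file ends with a `//` comment and no newline, the `;` still lands on a fresh line instead of inside the comment.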

Now this may sound like a lot of work, but thankfully people like my friend Dave Ward have already solved the problem of combining and minifying our JavaScript files in a pretty easy way. However, I was looking at one of my favorite JavaScript libraries, SyntaxHighlighter, and thinking that it requires you to import an absolutely huge number of files in order to use it. SyntaxHighlighter has hosted versions of its files, so wouldn’t it be cool if I could just pull in those hosted versions along with my other JavaScript, combine and minify everything, and then push all of it up to my CDN?

So I started thinking: wouldn’t it be useful to have a utility I could pull into my projects that would let me pull in single JavaScript files, directories, and URLs, and then combine, minify, and even compress them? Yes, of course that would be cool! And since I was pretty much snowed in on Saturday, I set out to create this little utility, which I call JavaScript Bundler. Pretty clever name, huh?

So what features does JavaScript Bundler have currently?

- It can pull in any number of JavaScript files by specifying their names.

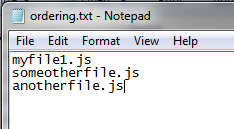

- It can pull in entire directories of JavaScript files by specifying the directory. You can also put an ordering.txt file in the directory containing a list of the file names in the order that you want them concatenated. Remember, ordering can be important when you are importing JavaScript!

- It can pull in JavaScript files from URLs, so if a library you want is hosted somewhere, you can easily pull it in.

- You can pull in any combination of the above three source types and combine and compress them into the same file.

- You can optionally minify the combined JavaScript using either jsmin or Google’s Closure Compiler. The YUI Compressor is currently included in the project, but support for it has not been implemented yet. I am open to implementing other minifiers/compressors as well.

- You can optionally output pre-gzipped versions of your files in case you are using a CDN which does not support gzipping files natively. This way you can direct users to the gzipped files if their browser supports it.

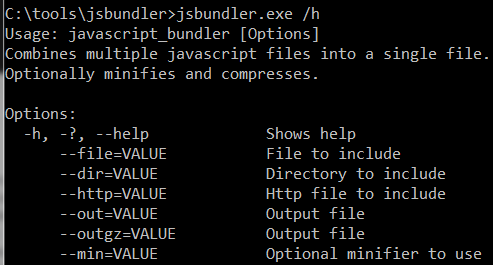
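If you host pre-gzipped copies yourself, something has to decide which file each browser gets. A minimal sketch of that decision, assuming the gzipped copy sits next to the original with a `.gz` suffix (a hypothetical naming convention) and that you have the request’s `Accept-Encoding` header in hand; `accepts_gzip` and `script_url_for` are made-up names:

```python
from typing import Optional


def accepts_gzip(accept_encoding: Optional[str]) -> bool:
    """Return True if an Accept-Encoding header value allows gzip.

    Honors q-values: "gzip;q=0" explicitly refuses gzip, and "*" covers
    gzip only when gzip isn't listed on its own.
    """
    if not accept_encoding:
        return False
    wildcard_q = None
    for item in accept_encoding.split(","):
        token, _, params = item.strip().partition(";")
        token = token.strip().lower()
        q = 1.0
        for param in params.split(";"):
            name, _, value = param.strip().partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat an unparseable q-value as "not acceptable"
        if token in ("gzip", "x-gzip"):
            return q > 0  # an explicit gzip entry overrides any wildcard
        if token == "*":
            wildcard_q = q
    return wildcard_q is not None and wildcard_q > 0


def script_url_for(plain_url: str, accept_encoding: Optional[str]) -> str:
    """Point gzip-capable browsers at the (hypothetical) pre-gzipped copy."""
    return plain_url + ".gz" if accepts_gzip(accept_encoding) else plain_url
```

Whichever way you route it, the `.gz` file must be served with `Content-Encoding: gzip` and a JavaScript content type, and the page that makes the choice should send `Vary: Accept-Encoding` so shared caches don’t hand the gzipped reference to a browser that can’t use it.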

Let’s take a look real quick at how it works. First, we can access the JavaScript Bundler’s help by passing "/h" (if you’re wondering what I am using for parsing command line parameters, well, that is the wonderful Mono.Options library which is written by my friend Jonathan Pryor):

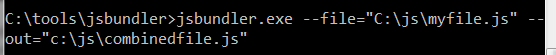

Now you can see that if we want to specify a single JavaScript file to include, we just pass it using the "--file=" parameter, and then we can specify the output via the "--out=" parameter. It would end up looking like this:

This would just output the contents of myfile.js into combinedfile.js, because we aren’t specifying a tool with which to perform the minification. If we wanted to use jsmin, we could specify it by passing the "--min=" parameter:


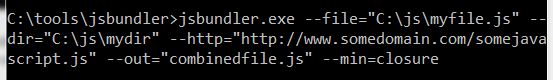

And if we wanted to pull in a file, a directory, and a URL, and then minify all of it using the Google Closure Compiler (which requires Java to be installed and on the path), we could do this:
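Mixing source types like this means each one has to be resolved to text before concatenation. A minimal Python sketch of that resolution step, assuming a source is treated as a URL if it starts with `http://` or `https://`, as a directory if one exists at that path, and as a single file otherwise; `read_source` and `bundle` are hypothetical names, not the tool’s API:

```python
import urllib.request
from pathlib import Path


def read_source(source: str) -> list[str]:
    """Resolve one source (URL, directory, or file) to a list of script texts."""
    if source.startswith(("http://", "https://")):
        # Remote library, e.g. a hosted copy of a third-party script.
        with urllib.request.urlopen(source) as response:
            charset = response.headers.get_content_charset() or "utf-8"
            return [response.read().decode(charset)]
    path = Path(source)
    if path.is_dir():
        # Every .js file directly inside the directory, alphabetically.
        return [p.read_text(encoding="utf-8") for p in sorted(path.glob("*.js"))]
    return [path.read_text(encoding="utf-8")]


def bundle(sources: list[str]) -> str:
    """Concatenate all sources in the order given, separated by ';' lines."""
    texts: list[str] = []
    for source in sources:
        texts.extend(read_source(source))
    return "\n;\n".join(texts)
```

Within a directory this sketch simply falls back to alphabetical order; it does not read ordering.txt.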

And remember, when we pull in that directory we might want to specify the order in which we concatenate the files. We can do this by adding a file called ordering.txt and placing it in the directory. It might look something like this:
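As an assumption for illustration, say ordering.txt holds one file name per line (for example `jquery.js`, then `plugin.js`, then `app.js`). A minimal sketch of applying such a file, with the hypothetical function name `ordered_scripts`; appending unlisted .js files alphabetically, rather than dropping them, is a design choice of this sketch and not necessarily what JavaScript Bundler does:

```python
from pathlib import Path


def ordered_scripts(directory: Path) -> list[Path]:
    """Return the .js files in `directory`, honoring an optional ordering.txt.

    Assumed ordering.txt format (hypothetical): one file name per line,
    blank lines ignored. Listed files come first, in the listed order;
    any remaining .js files follow alphabetically.
    """
    all_scripts = sorted(p.name for p in directory.glob("*.js"))
    ordering_file = directory / "ordering.txt"
    if not ordering_file.is_file():
        return [directory / name for name in all_scripts]

    listed: list[str] = []
    for line in ordering_file.read_text(encoding="utf-8").splitlines():
        name = line.strip()
        if not name or name in listed:
            continue  # skip blank lines and duplicate entries
        if not (directory / name).is_file():
            # Fail loudly: a typo here would otherwise reorder scripts silently.
            raise FileNotFoundError(f"ordering.txt lists a missing file: {name}")
        listed.append(name)

    remaining = [name for name in all_scripts if name not in listed]
    return [directory / name for name in listed + remaining]
```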

Overall I’d like to keep this utility fairly simple, and with a few minor exceptions it already fills the needs that I set out to fill. So what are those exceptions? What features do I want to add?

- More console output (with a quiet option). Currently it doesn’t really tell you what it is doing.

- Error handling. It doesn’t really check much right now.

- Ability to specify file masks when pulling in a directory.

- Use a config file to specify files, compression methods, etc… instead of passing items as command line parameters. I think this would give a bit more flexibility.

- Implement the YUI Compressor.

- Combine and compress CSS files as well.

- Maybe have an option to pull in standard libraries. Not sure how to work this out with versioning though.

- Any great ideas that you guys have!

I hope that if you get a chance you’ll check it out and let me know what you think. Also, if you have any great ideas for changes to make, or you want to help out, please leave a comment! Thanks!

Download v0.1 of the JavaScript Bundler, and if you want the (super ugly) source, just grab it from the JavaScript Bundler repository over on GitHub.


You might like to check out http://github.com/mvccontrib/MvcContrib/tree/master/src/MvcContrib.IncludeHandling/ and http://github.com/mvccontrib/MvcContrib/tree/bf72e4b0a80b313a2ce72eddeb93b7b365ac7f0e/src/Samples/MvcContrib.Samples.IncludeHandling

Justin, why not use "the bullet train" of JS and CSS compression? http://www.codeplex.com/shinkansen *cough* *cough*

Why use the "bullet train" when you can use the "space shuttle" of JS compressors? 🙂

As long as it’s not the Amtrak of JS compressors. 😀

This is a really complex space and there are a lot of different options and approaches depending upon the environment and your needs.

Would you like something that you can actually debug in your dev workflow? If so, then perhaps you should take a look at "script includers" like LABjs (which requires "cascades" of loads in a script block instead of manual includes) or Machine.Includer, which allows you to specify your dependencies on a script-by-script basis (the way you would with server-side code) and then resolves and loads them at request time. Neither of these options is realistic for performance-sensitive scenarios (like production), so we come back to…

Script bundling. Your post and tool provide one way to approach this issue. Another is the above-linked Shinkansen tool. Really, I think we have to (sigh, once again) take a page from the Ruby playbook and look at some of their tooling approaches, like Sprockets and (especially) Jammit. They are really awesome, IMO. (Shinkansen is in line with them, but I don’t personally jibe with its approach; it can be integrated with MVC, but it is pretty fugly.)

My major gripe with your tool is that if I have my own scheme for figuring out a piece of markup’s dependencies, then jsbundle.exe is redundant, period (especially if using the Closure Compiler, which has pretty much the same syntax on the CLI). So the real "value add" would be if you spelled out a convention for specifying dependencies (like Sprockets’ "// require" syntax), then did that scanning for users when jsbundle.exe is run, and then did the packaging.

Like, in your .aspx files (which get scanned), you have:

```html
<script type="text/javascript">
  // you can do..
  // require /static/scripts/jquery.js
  // require /static/scripts/dep1.js

  // or something like
  include('/static/scripts/jquery.js');
  include('/static/scripts/dep1.js');
</script>
```

The jquery.js dependency speaks for itself, but looking at dep1.js, we might have something like this at the top of the file:

```javascript
// require /static/scripts/dep2.js

// or

include('/static/scripts/dep2.js');
```

This would provide the basis for a tool/script to recursively scan through these files and build up a dependency tree which it can then flatten and remove dups from. You then get an ordered file list which can be passed to the compiler, et al.
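The recursive scan described above amounts to a depth-first topological sort. A minimal Python sketch, assuming exactly the two hypothetical directive styles from the snippets (`// require path` and `include('path');`) and a caller-supplied `read` function that returns a script’s text; `direct_dependencies` and `resolve_order` are made-up names, not the commenter’s actual scanner:

```python
import re
from typing import Callable

# Matches the two hypothetical directive styles from the comment above:
#   // require /static/scripts/dep2.js
#   include('/static/scripts/dep2.js');
REQUIRE_RE = re.compile(
    r"""^\s*(?://\s*require\s+(\S+)|include\(\s*['"]([^'"]+)['"]\s*\)\s*;?)""",
    re.MULTILINE,
)


def direct_dependencies(text: str) -> list[str]:
    """Return the paths a script (or page) requires, in the order written."""
    return [m.group(1) or m.group(2) for m in REQUIRE_RE.finditer(text)]


def resolve_order(entry_points: list[str], read: Callable[[str], str]) -> list[str]:
    """Flatten the dependency tree into a duplicate-free, load-safe order.

    Depth-first post-order: each script is emitted only after everything it
    requires. `read` maps a path to that script's source text.
    Raises ValueError on circular requires.
    """
    ordered: list[str] = []
    done: set[str] = set()
    in_progress: set[str] = set()

    def visit(path: str) -> None:
        if path in done:
            return  # already emitted; this is where duplicates are dropped
        if path in in_progress:
            raise ValueError(f"circular dependency involving {path}")
        in_progress.add(path)
        for dep in direct_dependencies(read(path)):
            visit(dep)
        in_progress.discard(path)
        done.add(path)
        ordered.append(path)

    for entry in entry_points:
        visit(entry)
    return ordered
```

Scanning a page’s script block with `direct_dependencies` gives the entry points; `resolve_order` then yields the ordered file list you would hand to the compiler.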

I personally already do this (and then run the Google Closure Compiler) using a hand-rolled scanner (a Python script) as part of my own build process. So there’s not really anything for me in this tool, atm. It might be something that you could look at to add some value, though. It’s not too crazy. I also think there’s some real value in the server-caching-based approaches in tools like Jammit or Shinkansen.

It’s funny that this post happened at this time. This and similar issues have been floating around a lot lately on sites like Ajaxian and Daily.js, as well. I’m also prepping to give several talks on this topic at upcoming code camps in the Northwest.

@Jeff Thanks for your thoughtful comments, and I agree that if you are taking the approach of automating this process using the Closure compiler, then this tool adds little value to your workflow.

On the other hand, though, my goal was to provide a tool that can be extended to cover many scenarios, such as bundling and minifying for production, but also deploying debug versions of files. I also wanted a tool that supports CSS compression.

In the end, I do want to expose the code behind the command line tool as a set of libraries that could be used within an application. In that case, it could be leveraged to do something like scanning and replacing JavaScript files during a build process, or maybe even at runtime.

Again, thanks for the ideas.

Nice post, Justin! We should take the same steps for CSS files. I posted a way of getting started with this line of thinking in ASP.NET MVC: http://www.curtismitchell.com/2b/?p=38

It is not as complete as what you have presented, but it shows a different approach to combining static plain text assets.

@Curtis Yeah, I definitely want to do the same thing for CSS files. Your solution looks very good in the way that it integrates easily into an ASP.NET MVC application. I added some ASP.NET support to my tool; you can check it out here: http://www.codethinked.com/post/2010/02/11/Bundler-Part-2-ASPNET-Integration.aspx

It doesn’t take the same route that your solution does; it still generates a physical file.

I cannot download JSBundler.exe

The download page has become SquishIt. I need help!

@Daniel JsBundler has been renamed to SquishIt, and the tool has changed a bit. If you want a command line tool to combine JS files, I would check out AjaxMin or the YUI Compressor on CodePlex.